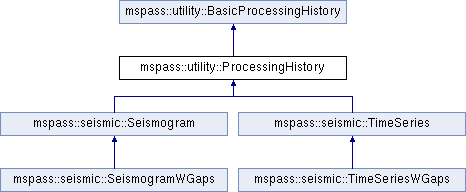

Lightweight class to preserve procesing chain of atomic objects. More...

#include <ProcessingHistory.h>

Public Member Functions | |

| ProcessingHistory () | |

| ProcessingHistory (const std::string jobnm, const std::string jid) | |

| ProcessingHistory (const ProcessingHistory &parent) | |

| bool | is_empty () const |

| bool | is_raw () const |

| bool | is_origin () const |

| bool | is_volatile () const |

| bool | is_saved () const |

| size_t | number_of_stages () override |

| Return number of processing stages that have been applied to this object. | |

| void | set_as_origin (const std::string alg, const std::string algid, const std::string uuid, const AtomicType typ, bool define_as_raw=false) |

| std::string | new_ensemble_process (const std::string alg, const std::string algid, const AtomicType typ, const std::vector< ProcessingHistory * > parents, const bool create_newid=true) |

| void | add_one_input (const ProcessingHistory &data_to_add) |

| Add one datum as an input for current data. | |

| void | add_many_inputs (const std::vector< ProcessingHistory * > &d) |

| Define several data objects as inputs. | |

| void | merge (const ProcessingHistory &data_to_add) |

| Merge the history nodes from another. | |

| void | accumulate (const std::string alg, const std::string algid, const AtomicType typ, const ProcessingHistory &newinput) |

| Method to use with a spark reduce algorithm. | |

| std::string | clean_accumulate_uuids () |

| Clean up inconsistent uuids that can be produced by reduce. | |

| std::string | new_map (const std::string alg, const std::string algid, const AtomicType typ, const ProcessingStatus newstatus=ProcessingStatus::VOLATILE) |

| Define this algorithm as a one-to-one map of same type data. | |

| std::string | new_map (const std::string alg, const std::string algid, const AtomicType typ, const ProcessingHistory &data_to_clone, const ProcessingStatus newstatus=ProcessingStatus::VOLATILE) |

| Define this algorithm as a one-to-one map. | |

| std::string | map_as_saved (const std::string alg, const std::string algid, const AtomicType typ) |

| Prepare the current data for saving. | |

| void | clear () |

| std::multimap< std::string, mspass::utility::NodeData > | get_nodes () const |

| int | stage () const |

| ProcessingStatus | status () const |

| std::string | id () const |

| std::pair< std::string, std::string > | created_by () const |

| NodeData | current_nodedata () const |

| std::string | newid () |

| int | number_inputs () const |

| int | number_inputs (const std::string uuidstr) const |

| void | set_id (const std::string newid) |

| std::list< mspass::utility::NodeData > | inputs (const std::string id_to_find) const |

| Return a list of data that define the inputs to a give uuids. | |

| ProcessingHistory & | operator= (const ProcessingHistory &parent) |

Public Member Functions inherited from mspass::utility::BasicProcessingHistory Public Member Functions inherited from mspass::utility::BasicProcessingHistory | |

| BasicProcessingHistory (const std::string jobname, const std::string jobid) | |

| BasicProcessingHistory (const BasicProcessingHistory &parent) | |

| std::string | jobid () const |

| void | set_jobid (const std::string &newjid) |

| std::string | jobname () const |

| void | set_jobname (const std::string jobname) |

| BasicProcessingHistory & | operator= (const BasicProcessingHistory &parent) |

Public Attributes | |

| ErrorLogger | elog |

Protected Attributes | |

| std::multimap< std::string, mspass::utility::NodeData > | nodes |

Protected Attributes inherited from mspass::utility::BasicProcessingHistory Protected Attributes inherited from mspass::utility::BasicProcessingHistory | |

| std::string | jid |

| std::string | jnm |

Detailed Description

Lightweight class to preserve procesing chain of atomic objects.

This class is intended to be used as a parent for any data object in MsPASS that should be considered atomic. It is designed to completely preserve the chain of processing algorithms applied to any atomic data to put it in it's current state. It is designed to save that information during processing with the core information that can then be saved to define the state. Writers for atomic objects inheriting this class should arrange to save the data contained in it to history collection in MongoDB. Note that actually doing the inverse is a different problem that are expected to be implemented as extesions of this class to be used in special programs used to reconstrut a data workflow and the processing chain applied to produce any final output.

The design was complicated by the need to keep the history data from causing memory bloat. A careless implementation could be prone to that problem even for modest chains, but we were particularly worried about iterative algorithms that could conceivably multiply the size of out of control. There was also the fundamental problem of dealing with transient versus data stored in longer term storage instead of just in memory. Our implementation was simplified by using the concept of a unique id with a Universal Unique IDentifier. (UUID) Our history mechanism assumes each data object has a uuid assigned to it on creation by an implementation id of the one object this particular record is associated with on dependent mechanism. That is, whenever a new object is created in MsPASS using the history feature one of these records will be created for each data object that is defined as atomic. This string defines unique key for the object it could be connected to with the this pointer. The parents of the current object are defined by the inputs data structure below.

In the current implementation id is string representation of a uuid maintained by each atomic object. We use a string to maximize flexibility at a minor cost for storage.

Names used imply the following concepts: raw - means the data is new input to mspass (raw data from data center, field experiment, or simulation). That tag means no prior history can be reconstructed. origin - top-level ancestor of current data. The top of a processing chain is always tagged as an origin. A top level can also be "raw" but not necessarily. In particular, readers that load partially processed data should mark the data read as an origin, but not raw. stage - all processed data objects that are volatile elements within a workflow are defined as a stage. They are presumed to leave their existence known only through ancestory preserved in the processing chain. A stage becomes a potential root only when it is saved by a writer where the writer will mark that position as a save. Considered calling this a branch, but that doesn't capture the concept right since we require this mechanism to correctly perserve splits into multiple outputs. We preserve that cleanly for each data object. That is, the implementation make it easy to reconstruct the history of a single final data object, but reconstructing interlinks between objects in an overall processing flow will be a challenge. That was a necessary compomise to avoid memory bloat. The history is properly viewed as a tree branching from a single root (the final output) to leaves that define all it's parents.

The concepts of raw, origin, and stage are implemented with the enum class defined above called ProcessingStatus. Each history record has that as an attribute, but each call to new_stage updates a copy kept inside this object to simplify the python wrappers.

Constructor & Destructor Documentation

◆ ProcessingHistory() [1/3]

| mspass::utility::ProcessingHistory::ProcessingHistory | ( | ) |

Default constructor.

◆ ProcessingHistory() [2/3]

| mspass::utility::ProcessingHistory::ProcessingHistory | ( | const std::string | jobnm, |

| const std::string | jid | ||

| ) |

Construct and fill in BasicProcessingHistory job attributes.

- Parameters

-

jobnm - set as jobname jid - set as jobid

◆ ProcessingHistory() [3/3]

| mspass::utility::ProcessingHistory::ProcessingHistory | ( | const ProcessingHistory & | parent | ) |

Standard copy constructor.

Member Function Documentation

◆ accumulate()

| void mspass::utility::ProcessingHistory::accumulate | ( | const std::string | alg, |

| const std::string | algid, | ||

| const AtomicType | typ, | ||

| const ProcessingHistory & | newinput | ||

| ) |

Method to use with a spark reduce algorithm.

A reduce operator in spark utilizes a binary function where two inputs are used to generate a single output object. Because the inputs could be scattered on multiple processor nodes this operation must be associative. The new_ensemble_process method does not satisfy that constraint so this method was necessary to handle that type of algorithm correctly.

The way this algorithm works is it fundamentally branches on two different cases: (1) initialization, which is detected by testing if the node data map is empty or (2) secondary calls. This should work even if multiple inputs are combined at the end of the reduce operation because the copies being merged will not be empty. Note an empty input will create a complaint entry in the error log.

References current_nodedata(), get_nodes(), is_empty(), merge(), newid(), and mspass::utility::NodeData::stage.

◆ add_many_inputs()

| void mspass::utility::ProcessingHistory::add_many_inputs | ( | const std::vector< ProcessingHistory * > & | d | ) |

Define several data objects as inputs.

This method acts like add_one_input in that it alters only the inputs chain. In fact it is nothing more than a loop over the components of the vector calling add_one_input for each component.

- Parameters

-

d is the vector of data to define as inputs

References add_one_input().

◆ add_one_input()

| void mspass::utility::ProcessingHistory::add_one_input | ( | const ProcessingHistory & | data_to_add | ) |

Add one datum as an input for current data.

This method MUST ONLY be called after a call to new_ensemble_process in the situation were additional inputs need to be defined that were not available at the time new_ensemble_process was called. An example might be a stack that was created within the scope of "algorithm" and then used in some way to create the output data. In any case it differs fundamentally from new_ensemble_process in that it does not touch attributes that define the current state of "this". It simply says this is another input to the data "this" contains.

- Parameters

-

data_to_add is the ProcessingHistory of the data object to be defined as input. Note the type of the data to which it is linked will be saved as the base of the input chain from data_to_add. It can be different from the type of "this".

References current_nodedata(), get_nodes(), id(), is_empty(), and mspass::utility::ErrorLogger::log_error().

◆ clean_accumulate_uuids()

| string mspass::utility::ProcessingHistory::clean_accumulate_uuids | ( | ) |

Clean up inconsistent uuids that can be produced by reduce.

In a spark reduce operation it is possible to create multiple uuid keys for inputs to the same algorithm instance. That happpens because the mechanism used by ProcessingHistory to define the process history tree is not associative. When a reduce gets sprayed across multiple nodes multiple initializations can occur that make artifical inconsitent uuids. This method should normally be called after a reduce operator if history is being preserved or the history chain may be foobarred - no invalid just mess up with extra branches in the processing tree.

A VERY IMPORTANT limitation of the algorithm used by this method is that the combination of algorithm and algid in "this" MUST be unique for a given job run when a reduce is called. i.e. if an earlier workflow had used alg and algid but with a different jobid and jobname the distintion cannot be detected with this algorithm. This means our global history handling must guarantee algid is unique for each run.

- Returns

- unique uuid for alg,algid match set in the history chain. Note if there are no duplicates it simply returns the only one it finds. If there are duplicates it returns the lexically smallest (first in alphabetic order) uuid. Most importantly if there is no match or if history is empty it returns the string UNDEFINED.

References mspass::utility::NodeData::algid, mspass::utility::NodeData::algorithm, current_nodedata(), is_empty(), and mspass::utility::NodeData::uuid.

◆ clear()

| void mspass::utility::ProcessingHistory::clear | ( | ) |

Clear this history chain - use with caution.

◆ created_by()

|

inline |

Return the algorithm name and id that created current node.

◆ current_nodedata()

| NodeData mspass::utility::ProcessingHistory::current_nodedata | ( | ) | const |

Return all the attributes of current.

This is a convenience method strictly for the C++ interface (it too nonpythonic to be useful to wrap for python). It returns a NodeData class containing the attributes of the head of the chain. Like the getters above that is needed to save that data.

References mspass::utility::NodeData::algid, mspass::utility::NodeData::algorithm, mspass::utility::NodeData::stage, mspass::utility::NodeData::status, mspass::utility::NodeData::type, and mspass::utility::NodeData::uuid.

◆ get_nodes()

| multimap< string, NodeData > mspass::utility::ProcessingHistory::get_nodes | ( | ) | const |

Retrieve the nodes multimap that defines the tree stucture branches.

This method does more than just get the protected multimap called nodes. It copies the map and then pushes the "current" contents to the map before returning the copy. This allows the data defines as current to not be pushed into the tree until they are needed.

References is_empty().

◆ id()

|

inline |

Return the id of this object set for this history chain.

We maintain the uuid for a data object inside this class. This method fetches the string representation of the uuid of this data object.

◆ inputs()

| list< NodeData > mspass::utility::ProcessingHistory::inputs | ( | const std::string | id_to_find | ) | const |

Return a list of data that define the inputs to a give uuids.

This low level getter returns the NodeData objects that define the inputs to the uuid of some piece of data that was used as input at some stage for the current object.

- Parameters

-

id_to_find is the uuid for which input data is desired.

- Returns

- list of NodeData that define the inputs. Will silently return empty list if the key is not found.

◆ is_empty()

| bool mspass::utility::ProcessingHistory::is_empty | ( | ) | const |

Return true if the processing chain is empty.

This method provides a standard test for an invalid, empty processing chain. Constructors except the copy constructor will all put this object in an invalid state that will cause this method to return true. Only if the chain is initialized properly with a call to set_as_origin will this method return a false.

◆ is_origin()

| bool mspass::utility::ProcessingHistory::is_origin | ( | ) | const |

Return true if the current data is in state defined as "origin" - see class description

◆ is_raw()

| bool mspass::utility::ProcessingHistory::is_raw | ( | ) | const |

Return true if the current data is in state defined as "raw" - see class description

◆ is_saved()

| bool mspass::utility::ProcessingHistory::is_saved | ( | ) | const |

Return true if the current data is in state defined as "saved" - see class description

◆ is_volatile()

| bool mspass::utility::ProcessingHistory::is_volatile | ( | ) | const |

Return true if the current data is in state defined as "volatile" - see class description

◆ map_as_saved()

| string mspass::utility::ProcessingHistory::map_as_saved | ( | const std::string | alg, |

| const std::string | algid, | ||

| const AtomicType | typ | ||

| ) |

Prepare the current data for saving.

Saving data is treated as a special form of map operation. That is because a save by our definition is always a one-to-one operation with an index entry for each atomic object. This method pushes a new entry in the history chain tagged by the algorithm/algid field for the writer. It differs from new_map in the important sense that the uuid is not changed. The record this sets in the nodes multimap will then have the same uuid for the key as the that in NodeData. That along with the status set SAVED can be used downstream to recognize save records.

It is VERY IMPORTANT for use of this method to realize this method saves nothing. It only preps the history chain data so calls that follow will retrieve the right information to reconstruct the full history chain. Writers should follow this sequence:

- call map_as_saved with the writer name for algorithm definition

- save the data and history chain to MongoDB.

- be sure you have a copy of the uuid string of the data just saved and call the clear method.

- call the set_as_origin method using the uuid saved with the algorithm/id the same as used for earlier call to map_as_saved. This makes the put ProcessingHistory in a state identical to that produced by a reader.

- Parameters

-

alg is the algorithm names to assign to the ouput. This would normally be name defining the writer. algid is an id designator to uniquely define an instance of algorithm. Note that algid must itself be a unique keyword or the history chains will get scrambled. alg is mostly carried as baggage to make output more easily comprehended without additional lookups. Note one model to distinguish records of actual save and redefinition of the data as an origin (see above) is to use a different id for the call to map_as_saved and later call to set_as_origin. This code doesn't care, but that is an implementation detail in how this will work with MongoDB. typ defines the data type (C++ class) that was just saved.

References current_nodedata(), id(), is_empty(), and mspass::utility::ErrorLogger::log_error().

◆ merge()

| void mspass::utility::ProcessingHistory::merge | ( | const ProcessingHistory & | data_to_add | ) |

Merge the history nodes from another.

- Parameters

-

data_to_add is the ProcessingHistory of the data object to be merged.

References get_nodes(), id(), is_empty(), and mspass::utility::ErrorLogger::log_error().

◆ new_ensemble_process()

| string mspass::utility::ProcessingHistory::new_ensemble_process | ( | const std::string | alg, |

| const std::string | algid, | ||

| const AtomicType | typ, | ||

| const std::vector< ProcessingHistory * > | parents, | ||

| const bool | create_newid = true |

||

| ) |

Define history chain for an algorithm with multiple inputs in an ensemble.

Use this method to define the history chain for an algorithm that has multiple inputs for each output. Each output needs to call this method to build the connections that define how all inputs link to the the new data being created by the algorithm that calls this method. Use this method for map operators that have an ensemble object as input and a single data object as output. This method should be called in creation of the output object. If the algorthm builds multiple outputs to build an output ensemble call this method for each output before pushing it to the output ensemble container.

This method should not be used for a reduce operation in spark. It does not satisfy the associative rule for reduce. Use accumulate for reduce operations.

Normally, it makes sense to have the boolean create_newid true so it is guaranteed the current_id is unique. There is little cost in creating a new one if there is any doubt the current_id is not a duplicate. The false option is there only for rare cases where the current id value needs to be preserved.

Note the vector of data passed is raw pointers for efficiency to avoid excessive copying. For normal use this should not create memory leaks but make sure you don't try to free what the pointers point to or problems are guaranteed. It is VERY IMPORTANT to realize that all the pointers are presumed to point to the ProcessingHistory component of a set of larger data object (Seismogram or TimeSeries). The parents do not all have be a common type as if they have valid history data within them their current type will be defined.

This method ALWAYS marks the status as VOLATILE.

- Parameters

-

alg is the algorithm names to assign to the origin node. This would normally be name defining the algorithm that makes sense to a human. algid is an id designator to uniquely define an instance of algorithm. Note that algid must itself be a unique keyword or the history chains will get scrambled. alg is mostly carried as baggage to make output more easily comprehended without additional lookups. typ defines the data type (C++ class) the algorithm that is generating this data will create. create_newid is a boolean defining how the current id is handled. As described above, if true the method will call newid and set that as the current id of this data object. If false the current value is left intact.

- Returns

- a string representation of the uuid of the data to which this ProcessingHistory is now attached.

References clear(), get_nodes(), is_empty(), mspass::utility::ErrorLogger::log_error(), newid(), and mspass::utility::NodeData::stage.

◆ new_map() [1/2]

| std::string mspass::utility::ProcessingHistory::new_map | ( | const std::string | alg, |

| const std::string | algid, | ||

| const AtomicType | typ, | ||

| const ProcessingHistory & | data_to_clone, | ||

| const ProcessingStatus | newstatus = ProcessingStatus::VOLATILE |

||

| ) |

Define this algorithm as a one-to-one map.

Many algorithms define a one-to-one map where each one input data object creates one output data object. This class allows the input and output to be different data types requiring only that one input will map to one output. It differs from the overloaded method with fewer arguments in that it should be used if you need to clear and refresh the history chain for any reason. Known examples are creating simulation waveforms for testing within a workflow that have no prior history data loaded but which clone some properties of another piece of data. This method should be used in any situation where the history chain in the current data is wrong but the contents are the linked to some other process chain. It is supplied to cover odd cases, but use will likely be rare.

- Parameters

-

alg is the algorithm names to assign to the origin node. This would normally be name defining the algorithm that makes sense to a human. algid is an id designator to uniquely define an instance of algorithm. Note that algid must itself be a unique keyword or the history chains will get scrambled. alg is mostly carried as baggage to make output more easily comprehended without additional lookups. typ defines the data type (C++ class) the algorithm that is generating this data will create. data_to_clone is reference to the ProcessingHistory section of a parent data object that should be used to override the existing history chain. newstatus is how the status marking for the output. Normal (default) would be VOLATILE. This argument was included mainly for flexibility in case we wanted to extend the allowed entries in ProcessingStatus.

◆ new_map() [2/2]

| std::string mspass::utility::ProcessingHistory::new_map | ( | const std::string | alg, |

| const std::string | algid, | ||

| const AtomicType | typ, | ||

| const ProcessingStatus | newstatus = ProcessingStatus::VOLATILE |

||

| ) |

Define this algorithm as a one-to-one map of same type data.

Many algorithms define a one-to-one map where each one input data object creates one output data object. This (overloaded) version of this method is most appropriate when input and output are the same type and the history chain (ProcessingHistory) is what the new algorithm will alter to make the result when it finishes. Use the overloaded version with a separate ProcessingHistory copy if the current object's data are not correct. In this algorithm the chain for this algorithm is simply appended with new definitions.

- Parameters

-

alg is the algorithm names to assign to the origin node. This would normally be name defining the algorithm that makes sense to a human. algid is an id designator to uniquely define an instance of algorithm. Note that algid must itself be a unique keyword or the history chains will get scrambled. alg is mostly carried as baggage to make output more easily comprehended without additional lookups. typ defines the data type (C++ class) the algorithm that is generating this data will create. newstatus is how the status marking for the output. Normal (default) would be VOLATILE. This argument was included mainly for flexibility in case we wanted to extend the allowed entries in ProcessingStatus.

◆ newid()

| string mspass::utility::ProcessingHistory::newid | ( | ) |

Create a new id.

This creates a new uuid - how is an implementation detail but here we use boost's random number generator uuid generator that has some absurdly small probability of generating two equal ids. It returns the string representation of the id created.

◆ number_inputs() [1/2]

| int mspass::utility::ProcessingHistory::number_inputs | ( | ) | const |

Return the number of inputs used to create current data.

In a number of contexts it can be useful to know the number of inputs defined for the current object. This returns that count.

References number_inputs().

◆ number_inputs() [2/2]

| int mspass::utility::ProcessingHistory::number_inputs | ( | const std::string | uuidstr | ) | const |

Return the number of inputs defined for any data in the process chain.

This overloaded version of number_inputs asks for the number of inputs defined for an arbitrary uuid. This is useful only if backtracing the ancestory of a child.

- Parameters

-

uuidstr is the uuid string to check in the ancestory record.

◆ number_of_stages()

|

overridevirtual |

Return number of processing stages that have been applied to this object.

One might want to know how many processing steps have been previously applied to produce the current data. For linear algorithms that would be useful only in debugging, but for an iterative algorithm it can be essential to avoid infinite loops with a loop limit parameter. This method returns how many times something has been done to alter the associated data. It returns 0 if the data are raw.

Important note is that the number return is the number of processing steps since the last save. Because a save operation is assumed to save the history chain then flush it there is not easy way at present to keep track of the total number of stages. If we really need this functionality it could be easily retrofitted with another private variable that is not reset when the clear method is called.

Reimplemented from mspass::utility::BasicProcessingHistory.

◆ operator=()

| ProcessingHistory & mspass::utility::ProcessingHistory::operator= | ( | const ProcessingHistory & | parent | ) |

Assignment operator.

◆ set_as_origin()

| void mspass::utility::ProcessingHistory::set_as_origin | ( | const std::string | alg, |

| const std::string | algid, | ||

| const std::string | uuid, | ||

| const AtomicType | typ, | ||

| bool | define_as_raw = false |

||

| ) |

Set to define this as the top origin of a history chain.

This method should be called when a new object is created to initialize the history as an origin. Note again an origin may be raw but not all origins are define as raw. This interface controls that through the boolean define_as_raw (false by default). python wrappers should define an alternate set_as_raw method that calls this method with define_as_raw set true.

It is VERY IMPORTANT to realize that the uuid argument passed to this method is if fundamental importance. That string is assumed to be a uuid that can be linked to either a parent data object read from storage and/or linked to a history chain saved by a prior run. It becomes the current_id for the data to which this object is a parent. This method also always does two things that define how the contents can be used. current_stage is ALWAYS set 0. We distinguish a pure origin from an intermediate save ONLY by the status value saved in the history chain. That is, only uuids with status set to RAW are viewed as guaranteed to be stored. A record marked ORIGIN is assumed to passed through save operation. To retrieve the history chain from multiple runs the pieces have to be pieced together by history data stored in MongoDB.

The contents of the history data structures should be empty when this method is called. That would be the norm for any constructor except those that make a deep copy. If unsure the clear method should be called before this method is called. If it isn't empty it will be cleared anyway and a complaint message will be posted to elog.

- Parameters

-

alg is the algorithm names to assign to the origin node. This would normally be a reader name, but it could be a synthetic generator. algid is an id designator to uniquely define an instance of algorithm. Note that algid must itself be a unique keyword or the history chains will get scrambled. uuid unique if for this data object (see note above) typ defines the data type (C++ class) "this" points to. It might be possible to determine this dynamically, but a design choice was to only allow registered classes through this mechanism. i.e. the enum class typ implements has a finite number of C++ classes it accepts. The type must be a child ProcessingHistory. define_as_raw sets status as RAW if true and ORIGIN otherwise.

- Exceptions

-

Never throws an exception BUT this method will post a complaint to elog if the history data structures are not empty and it the clear method needs to be called internally.

References clear(), and mspass::utility::ErrorLogger::log_error().

◆ set_id()

| void mspass::utility::ProcessingHistory::set_id | ( | const std::string | newid | ) |

Set the uuid manually.

It may occasionally be necessary to create a uuid by some other mechanism. This allows that, but this method should be used with caution and only if you understand the consequences.

- Parameters

-

newid is string definition to use for the id.

References newid().

◆ stage()

|

inline |

Return the current stage count for this object.

We maintain a counter of the number of processing steps that have been applied to produce this data object. This simple method returns that counter. With this implementation this is identical to number_of_stages. We retain it in the API in the event we want to implement an accumulating counter.

◆ status()

|

inline |

Return the current status definition (an enum).

The documentation for this class was generated from the following files:

- /home/runner/work/mspass/mspass/cxx/include/mspass/utility/ProcessingHistory.h

- /home/runner/work/mspass/mspass/cxx/src/lib/utility/ProcessingHistory.cc