Data Object Design Concepts

Overview

TimeSeries and Seismogram respectively.The waveform data (normally the largest in size).

A generalization of the traditional concept of a trace header. These are accessible as simple name value pairs but the value can be anything. It is a bit like a python dictionary, but implemented with standard C++.

An error logger that provides a generic mechanism to post error messages in a parallel processing environment.

An optional object-level processing history mechanism.

Ensemble. The implementation in C++ uses a template to define a

generic ensemble. A limitation of the current capability to link C++

binary code with python is that templates do not translate directly.

Consequently, the python interface uses two different names to define

Ensembles of TimeSeries and Seismogram objects:

TimeSeriesEnsemble

and SeismogramEnsemble respectively.History

Make the API as generic as possible.

Use inheritance more effectively to make the class structure more easily extended to encapsulate different variations in seismic data.

Divorce the API completely from Antelope to achieve the full open source goals of MsPASS. Although not implemented at this time, the design will allow a version 2 of SEISPP in Antelope, although that is a “small matter of programming” that may never happen.

Eliminate unnecessary/extraneous constructors and methods developed by the authors as the class structure evolved organically over the years. In short, think through the core concepts more carefully and treat SEISPP as a prototype.

Extend the Metadata object (see below) to provide support for more types (objects) than the lowest common denominator of floats, ints, strings, and booleans handled by SEISPP.

Reduce the number of public attributes to make the code less prone to user errors. Note the word “reduce” not “eliminate” as many books advise. The general rule is simple parameter type attributes are only accessible through getters and putters while the normally larger main data components are public. That was done to improve performance and allow things like numpy operations on data vectors.

obspy handles what we call Metadata through set of python objects that have to be maintained separately and we think unnecessarily complicate the API. We aimed instead to simplify the management of Metadata as much as possible. Our goal was to make the system more like seismic reflection processing systems that manage the same problem through a simple namespace wherein metadata can be fetched with simple keys. We aimed to hide any hierarchic structures (e.g relational database table relationships, obspy object hierarchies, or MongoDB normalized data structures) behind the (python)

Schemaobject to reduce all Metadata to pure name:value pairs.obspy does not handle three component data in a native way, but mixes up the concepts we call

SeismogramandEnsemblein to a common python object they define as a Stream. We would argue our model is a more logical encapsulation of the concepts that define these ideas. For example, a collection of single component data like a seismic reflection shot gather is a very different thing than a set of three component channels that define the output of three sensors at a common point in space. Hence, we carefully separateTimeSeriesandSeismogram(our name for Three-Component data). We further distinguishEnsemblesof each atomic type.

Core Concepts

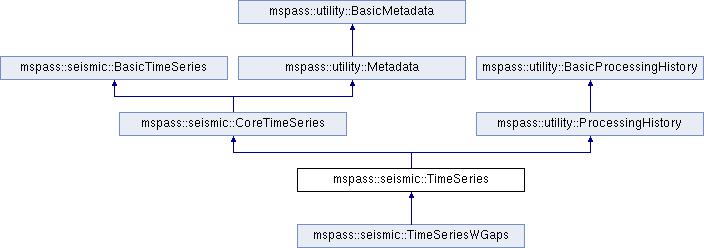

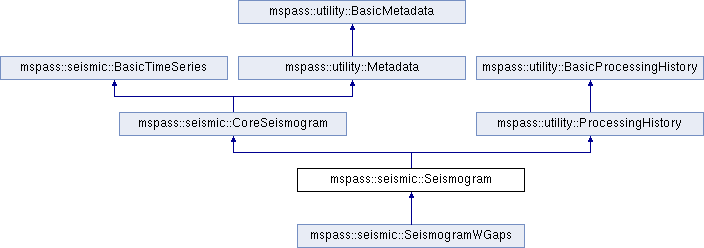

Overview - Inheritance Relationships

BasicTimeSeries,

BasicMetadata, and BasicProcessingHistory. Python supports multiple

inheritance and the wrappers make dynamic casting within the hierarchy

(mostly) automatic. e.g. a Seismogram object can be passed directly to a

python function that does only Metadata operations and it will be

handled seamlessly because python does not enforce type signatures on

functions. CoreTimeSeries and CoreSeismogram should be thought of a

defining core concepts independent from MsPASS. All MsPASS specific

components are inherited from ProcessingHistory. ProcessingHistory

implements two important concepts that were a design goal of MsPASS:

(a) a mechanism to preserve the processing history of a piece of data

to facilitate more reproducible science results, and (b) a parallel safe

error logging mechanism. A key point of the design of this class

hierarchy is that future users could chose to prune

the ProcessingHistory component and reuse CoreTimeSeries and

CoreSeismogram to build a

completely different framework.TimeSeries and Seismogram objects. The lower levels sometimes

but not always have python bindings.BasicTimeSeries - Base class of common data characteristics

This base class object can be best viewed as an answer to this questions: What is a time series? Our design answers this question by saying all time series data have the following elements:

We define a time series as data that has a fixed sample rate. Some people extend this definiion to arbitrary x-y data, but we view that as wrong. Standard textbooks on signal processing focus exclusively on uniformly sampled data. With that assumption the time of any sample is virtual and does not need to be stored. Hence, the base object has methods to convert sample numbers to time and the inverse (time to sample number).

Data processing always requires the time series have a finite length. Hence, our definition of a time series directly supports windowed data of a specific length. The getter for this attribute is

npts()and the setter isset_npts(int). This definition does not preclude an extension to modern continuous data sets that are too large to fit in memory, but that is an extension we don’t currently support.We assume the data has been cleaned and lacks data gaps. Real continuous data today nearly always have gaps at a range of scale created by a range of possible problems that create gaps: telemetry gaps, power failures, instrument failures, time tears, and with older instruments data gaps created by station servicing. MsPASS has stub API definitions for data with gaps, but these are currently not implemented. Since the main goal of MsPASS is to provide a framework for efficient processing of large data sets, we pass the job of finding and/or fixing data gaps to other packages or algorithms using MsPASS with a “when in doubt throw it out” approach to editing. The machinery to handle gap processing exists in both obpsy and Antelope and provide possible path to solution for users needing more extensive gap processing functionality.

live() and dead() respectively) and

setters to force live (set_live()) or dead (kill()). An important

thing to note is that an algorithm should always test if a data object

is defined as live. Some algorithms may choose to simply pass data marked

dead along without changing or removing it from the workflow.

Failure to test for the live condition can cause mysterious aborts when

an algorithm attempts to process invalid data.Handling Time

When tref is TimeReferenceType::Relative (TimeReferenceType.Relative in python) the computed times are some relatively small number from some well defined time mark. The most common relative standard is the implicit time standard used in all seismic reflection data: shot time. SAC users will recognize this idea as the case when IZTYPE==IO. Another important one used in MsPASS is an arrival time reference, which is a generalization of the case in SAC with IZTYPE==IA or ITn. We intentionally do not limit what this standard actually defines as how the data are handled depends only on the choice of UTC versus Relative. The ASSUMPTION is that if an algorithm needs to know the answer to the question, “Relative to what?”, that detail will be defined in a Metadata attribute.

When tref is TimeReferenceType::UTC (TimeReferenceType.UTC in python) all times are assumed to be an absolute time standard defined by coordinated universal time (UTC). We follow the approach used in Antelope and store ALL times defined as UTC with unix epoch times. We use this simple approach for two reasons: (1) storage (times can be stored as a simple double precision (64 bit float) field), and (2) efficiency (computing relative times is trivial compared to handling calendar data). This is in contrast to obspy which require ALL start times (t0 in mspass data objects) be defined by a python class they call UTCDateTime. Since MsPASS is linked to obspy we recommend you use the UTCDateTime class in python if you need to convert from epoch times to one of the calendar structures UTCDateTime can handle.

Metadata Concepts

Metadata object is best thought of through either of two

concepts well known to most seismologists: (1) headers (SAC), and (2)

a dictionary container in python. Both are ways to handle a general,

modern concept of

metadata commonly defined

as “data that provides information about data”. Packages like SAC use

fixed (usually binary fields) slots in an external data format to

define a finite set of attributes with a fixed namespace. obspy uses

a python dictionary like container they call

Stats

to store comparable information. That approach allows metadata

attributes to be extracted from a flexible container addressable by a

key word and that can contain any valid data. For example, a typical

obspy script will contain a line like the following to fetch the station

name from a Trace object d.sta=d.Stats["station"]

d={'time':10}

type(d['time'])

Within an individual application managing the namespace of attributes and type associations should be as flexible as possible to facilitate adapting legacy code to MsPASS. We provide a flexible aliasing method to map between attribute namespaces to make this possible. Any such application, however, must exercise care in any alias mapping to avoid type mismatch. We expect such mapping would normally be done in python wrappers.

Attributes stored in the database should have predictable types whenever possible. We use a python class called Schema described below to manage the attribute namespace is a way that is not especially heavy handed. Details are given below when we discuss the database readers and writers.

Care with type is most important in interactions with C/C++ and FORTRAN implementations. Pure python code can be pretty loose on type at the cost of efficiency. Python is thus the language of choice for working out a prototype, but when bottlenecks are found key sections may need to be implemented in a compiled language. In that case, the Schema rules provide a sanity check to reduce the odds of problems with type mismatches.

# Assume d is a Seismogram or TimeSeries which automatically casts to a Metadata in the python API use here

x=d.get_double("t0_shift") # example fetching a floating point number - here a time shift

n=d.get_int("evid") # example feching integer - here an event id

s=d.get_string("sta") # example fetching a UTF-8 string

b=d.get_bool("LPSPOL") # boolean for positive polarity used in SAC

d.put_double("t0_shift",x)

d.put_int("evid",n)

d.put_string("sta",s)

d.put_bool("LPSPOL",True)

d.put("names",name_list)

# or using a decorator defined in MsPASS

d["names"]=name_list

x=d.get("names")

# or using a decorator defined in MsPASS

x=d["names"]

mspass::utility::Metadata object

can be constructed directly from a python dict. That is used, for example,

in MongoDB database readers because a MongoDB “document” is returned as a

python dict in MongoDB’s python API.Managing Metadata type with mspasspy.db.Schema

Schema class is automatically loaded with the Database class

that acts as a handle to interact with MongoDB. Here we only document

methods available in the Schema class and discuss how these

can be used in developing a workflow with MsPASS.Schema is a subclass with the

name MetadataSchema. You can create an instance easily like this:from mspasspy.db.schema import MetadataSchema schema=MetadataSchema()

MetadataSchema currently has two main definitions that can be extracted

from the class as follows:mdseis=schema.Seismogram mdts=schema.TimeSeries

mdseis and mdts symbols above are instances of

a the MDSchemaDefinition class described here.mdseis and mdts

objects contain methods that can be used to get a list of restricted symbols

(the keys() method), the type that the framework expects that symbol

to define (the type() method), and a set of other utility methods.alias. We leave the exercise

of understanding how to use this feature to planned tutorials.Scalar versus 3C data

time method in BasicTimeSeries

can be used to convert an index to a time defined as a double). Note in mspass

the integer index always uses the C convention starting at 0 and not 1 as in FORTRAN,

linear algebra, and many signal processing books.

We use a C++ standard template library vector

container to

hold the sample data accessible through the public variable s in the C++ api. The

python API makes the vector container look like a numpy array that can

be accessed in same way sample data are handled in an obspy Trace

object in the “data” array. It is important to note that the C++ s vector is

mapped to data in the python API. The direct interface through numpy/scipy

allows one to manipulate sample data with numpy or scipy functions (e.g. simple bandpass

filters).

That can be useful for testing and prototyping but converting and algorithm

to a parallel form requires additional steps described here (LINK to parallel

section)Most modern seismic reflection systems provide some support for three-component data. In reflection processing scalar, multichannel raw data are often treated conceptually as a matrix with one array dimension defining the time variable and the other index defined by the channel number. When three component data are recorded the component orientation can be defined implicitly by a component index number. A 3C shot gather than can be indexed conveniently with three array indexes. A complication in that approach is that which index is used for which of the three concept required for a gather of 3C data is not standarized. Furthermore, for a generic system like mspass the multichannel model does not map cleanly into passive array data because a collection of 3C seismograms may have irregular size, may have variable sample rates, and may come from variable instrumentation. Hence, a simple matrix or array model would be very limiting and is known to create some cumbersome constructs.

Traditional multichannel data processing emerged from a world were instruments used synchronous time sampling. Seismic reflection processing always assumes during processing that time computed from sample numbers is accurate to within one sample. Furthermore, the stock assumption is that all data have sample 0 at shot time. That assumption is a necessary condition for the conceptual model of a matrix as a mathematical representation of scalar, multichannel data to be valid. That assumption is not necessarily true (in fact it is extremely restrictive if is required) in passive array data and raw processing requires efforts to make sure the time of all samples can be computed accurately and time aligned. Alignment for a single station is normally automatic although some instruments have measurable, constant phase lags at the single sample level. The bigger issue for all modern data is that the raw data are rarely stored in a multiplexed multichannel format, although the SEED format allows that. Most passive array data streams have multiple channels stored as compressed miniSEED packets that have to be unpacked and inserted into something like a vector container to be handled easily by a processing program. The process becomes more complicated for three-component data because at least three channels have to be manipulated and time aligned. The obspy package handles this issue by defining a Stream object that is a container of single channel Trace objects. They handle three component data as Stream objects with exactly three members in the container.

Seismogram. The data are directly accessible in C++ through a public

variable called u that is mnemonic for the standard symbol used in the

old testament of seismology by Aki and Richards. In python we use the

symbol data for consistency with TimeSeries.

There are two choices of the order of indices for this matrix.

The MsPASS implementation makes this choice: a Seismogram

defines index 0(1) as the channel number and index 1(2) as the time

index. The following python code section illustrates this more

clearly than any words:from mspasspy.ccore.seismic import Seismogram

d = Seismogram(100) # Create an empty Seismogram with storage for 100 time steps initialized to all zeros

d.data[0,50] = 1.0 # Create a delta function at time t0+dt*50 in component 0

data matrix with all

the tools of numpy noting that the data are in what numpy calls FORTRAN order.components_are_orthogonal is true after any sequence of orthogonal

transformations and components_are_cardinal is true when the

components are in the standard ENZ directions.The process of creating a Seismogram from a set of TimeSeries objects in a robust way is not trivial. Real data issues create a great deal of complexity to that conversion process. Issues include: (a) data with a bad channel that have to be discarded, (b) stations with multiple sensors that have to be sorted out, (c) stations with multiple sample rates (nearly universal with modern data) that cannot be merged, (d) data gaps that render one or more components of the set incomplete, and (e) others we haven’t remembered or which will appear with some future instrumentation. To handle this problem we have a module in MsPASS called

bundledocumented here(THIS NEEDS A LINK - wrote this when bundle had not yet been merged).

template <typename Tdata> class Ensemble : public Metadata

{

public:

vector<Tdata> member;

// ...

Tdata& operator[](const int n) const

// ...

}

n=d.member.size()

for i in range(n):

somefunction(d.member[i]) # pass member i to somefunction

The wrappers also make the ensemble members “iterable”. Hence the above block could also be written:

for x in d.member:

somefunction(x)

ProcessingHistory and Error Logging

Core versus Top-level Data Objects

TimeSeries and Seismogram objects extend their

“core” parents by adding two classes:ProcessingHistory, as the name implies, can (optionally) store the a complete record of the chain of processing steps applied to a data object to put it in it’s current state. The complete history has two completely different components described in more detail elsewhere in this User’s Manual: (a) global job information designed to allow extracting the full instance of the job stream under which a given data object was produced, and (b) a chain of parent waveforms and algorithms that modified them to get the data in the current state. Maintaining processing history is a complicated process that can lead to memory bloat in complex processing if not managed carefully. For this reason this feature is off by default. Our design objective was to treat object level history as a final step to create a reproducible final product. That would be most appropriate for published data to provide a mechanism for others to reproduce your work, archival data to allow you or others in your group to start up where you left off, or just for a temporary archive to preserve what you did.ErrorLoggeris an error logging object. The purpose of the error logger is to maintain a log of any errors or informative messages created during the processing of the data. All processing modules in MsPASS are designed with global error handlers so that they should never abort, but in worst case post a log message that tags a fatal error. (Note if any properly structured mspass enabled processing function throws an exception and aborts it has encountered a bug that needs to b reported to the authors.) In our design we considered making the ErrorLogger a base class for Seismogram and TimeSeries, but it does not satisfy the basic rule of making a concept a base class if the child “is a” ErrorLogger. It does, however, perfectly satisfy the idea that the object “has an” ErrorLogger. BothTimeSeriesandSeismogramuse the symbolelogas the name for the ErrorLogger object (e.g. If d is aSeismogramobject, d.elog, would refer to the error logger component of d.)’’

Object Level History Design Concepts

As summarized above the concept we wanted to capture in the history mechanism was a means to preserve the chain of processing events that were applied to get a piece of data in a current state. Our design assumes the history can be described by an inverted tree structure. That is, most workflows would merge many pieces of data (a reduce operation in map-reduce) to produce a given output. The process chain could then be viewed as tree growth with time running backward. The leaves are the data sources. Each growth season is one processing stage. As time moves forward the tree shrinks from many branches to a single trunk that is the current data state. The structure we use, however, is more flexible than real tree growth. Many-to-many mixes of data will produce a tree that does not look at all like the plant forms of nature, but we hope the notion of growth seasons, branch, and trees is useful to help understand how this works.

To reconstruct the steps applied to data to produce an output the following foundational data is required:

We need to associate the top of the inverted tree (the leaves) that are the parent data to the workflow. For seismic data that means the parent time series data extracted from a data center with web services or assembled and indexed on local, random access (i.e. MsPASS knows nothing about magnetic tapes) storage media.

MsPASS assumes all algorithms can be reduced to the equivalent of an abstraction of a function call. We assume the algorithm takes input data of one standard type and emits data of the same or different standard type. (“type” in this context means TimeSeries, Seismogram, or an obspy Trace object) The history mechanism is designed to preserve what the primary input and output types are.

Most algorithms have one to a large number of tunable parameters that determine their behavior. The history needs to preserve the full parametric information to reproduce the original behavior.

The same algorithm may be run with different parameters and behave very differently (e.g. a bandpass filter with different passbands). The history mechanism needs to distinguish these different instances while linking them to the same parent processing algorithm.

Some algorithms (e.g. what is commonly called a stacker in seismic reflection processing) merge many pieces of data to produce one or more outputs. A CMP stacker, for example, would take an array of normal moveout corrected data and average them sample-by-sample to produce one output for each gather passed to the processor. This is a many to one reducer. There are more complicate examples like the plane wave decomposition both Wang and Pavlis developed in the mid 2010s. That algorithm takes full event gathers, which for USArray could have thousands of seismograms, as inputs, and produces an output of many seismograms that are approximate plane wave components at a set of “pseudostation” points. The details of that algorithm are not the point, but it is a type example of a reducer that is a many-to-many operation. The history mechanism must be able to describe all forms of input and output from one-to-one to many-to-many.

Data have an origin that is assumed to be reproducible (e.g. download from a data center) but during processing intermediate results are by definition volatile. Intermediate saves of final results need to be defined by some mechanism to show the result were saved at that stage. The final result needs a way to verify it was successfully saved to storage.

Although saving intermediate results is frequently necessary, the process of saving the data must not break the full history chain.

The history mechanism must work for any normal logical branching and looping scenario possible with a python script.

Naive preservation of history data could cause a huge overload in memory usage and processing time. The design then needs to make the implementation as lightweight in memory and computational overhead as possible. The implementation needs to minimize memory usage as some algorithms require other inputs that are not small. Notably, the API was designd to support input that could be described by any python class. The a key concept is that our definition of “parameters” is broader than just a set of numbers. It means any data that is not one of the atomic types (currently TimeSeries and Seismogram objects) is considered a parameter.

A more subtle feature of the schedules supported in MsPASS for parallel processing is that data objects need to be serializable. For python programmers that is synonymous with “pickleable”. The most common G-tree algorithms we know of use linked lists of pointers to store the information we use describe object-level history. A different mechanism is needed that is an implementation detail described in the detailed section on

ProcessingHistory.

Error Logging Concepts

ErrorLogger object. Any

data processing module in MsPASS should NEVER exit on any error

condition except one from which the operating system cannot recover.

(e.g. a disk write error or memory allocation error)

All C++ and python processing modules need to have appropriate error

handlers (i.e. try/catch in C++ and raise/except in python) to keep a

single error from prematurely killing a large processing job. We

recommend all error handlers in processing functions post a message

that can help debug the error. Error messages should be registered

with the data object’s elog object. Error messages should not

normally be just posted to stdout (i.e. print in python) for two

reasons. First, stream io is not thread safe and garbled output is

nearly guaranteed unless the log message are rare. Second, with a

large dataset it can become a nearly impossible to find out which

pieces of data created the errors. Proper application of the

ErrorLogger object will eliminate both of these problems.try:

d.rotate_to_standard()

d.elog.log_verbose(alg,"rotate_to_standard succeed for me")

# ...

except MsPASSError as err:

d.elog.log_error(d.job_id(),alg,err)

d.kill()

Seismogram

object with a singular transformation matrix created, for example, by

incorrectly building the object with two redundant east-west

components. The rotate_to_standard method tries to compute a matrix

inverse of the transformation matrix, which will generate an

exception of type MsPASSError (the primary exception class for MsPASSS).

This example catches that exception with the expected type and passes it

directly to the ErrorLogger (d.elog). This form is correct because it

is documented that that is the only class exception the function will throw.

For more ambiguous cases we refer to multiple books and online sources

for best practices in python programming. The key point is in more

ambiguous cases the construct should catch the standard base

class Exception as a more generic handler. Finally, both calls to elog

methods contain additional parameters to tag the messages. alg is an

algorithm name and d.job_id() retrieves the job_id. Both are

global attributes handled through the global history management

system described in more detail in a separate section of this manual.